This article uses painting, as one vertical field, to show how AIGC can optimize the current painting workflow. It is meant to prompt some thinking about what AIGC can already achieve in an industry I am not close to, what new possibilities it may bring, and what we can do about it.

Simple Principles#

Below, I briefly explain how AIGC works, using diffusion models as the example and based on my own understanding.

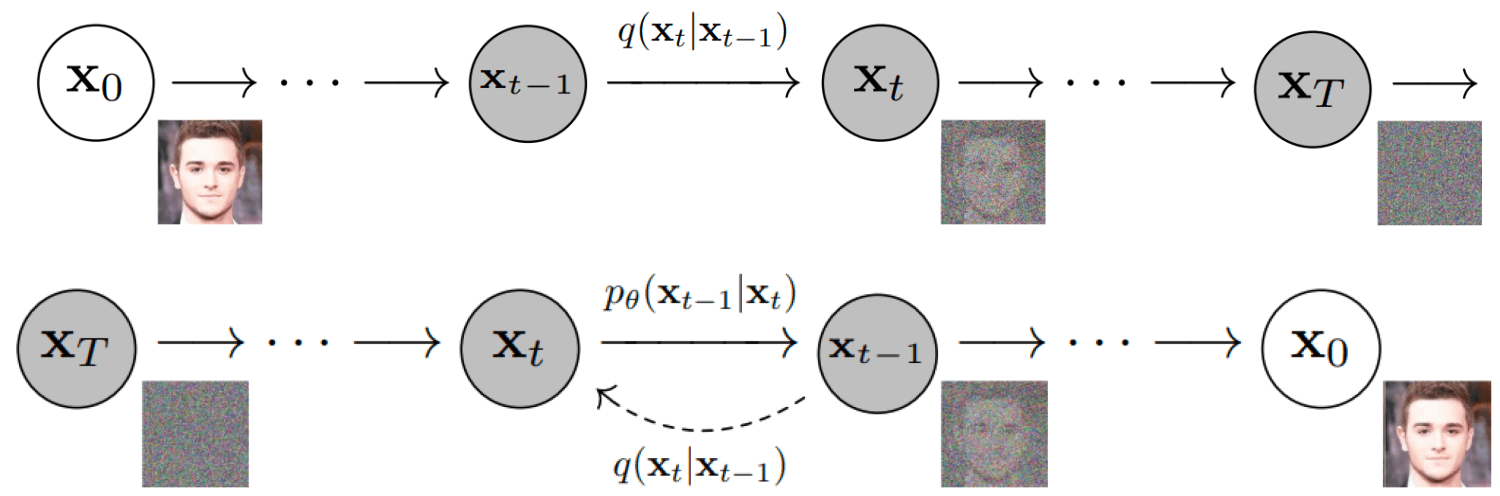

Training Process

We add noise to the original image step by step until it becomes pure noise; then we let the AI learn the reverse process, which is how to recover a clear, information-rich image from a noisy one. After that, we use conditions (such as descriptive text or images) to control this process, guiding each denoising iteration toward a specific image.
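The noising half of this process can be sketched in a few lines. This is a minimal illustration, assuming a DDPM-style linear noise schedule (the schedule, step count, and function names are my assumptions, not something the article specifies), and it uses a flat list of numbers as a stand-in for an image:

```python
import math
import random

def make_alpha_bar(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Cumulative product of (1 - beta_t) for an assumed linear noise schedule."""
    alpha_bar, running = [], 1.0
    for t in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * t / (num_steps - 1)
        running *= 1.0 - beta
        alpha_bar.append(running)
    return alpha_bar

def add_noise(x0, t, alpha_bar, rng):
    """Jump to step t in one shot: x_t = sqrt(a_t) * x0 + sqrt(1 - a_t) * eps."""
    signal = math.sqrt(alpha_bar[t])
    noise = math.sqrt(1.0 - alpha_bar[t])
    eps = [rng.gauss(0.0, 1.0) for _ in x0]
    x_t = [signal * x + noise * e for x, e in zip(x0, eps)]
    return x_t, eps  # eps is what a denoising network would learn to predict

rng = random.Random(0)
x0 = [rng.uniform(-1.0, 1.0) for _ in range(8 * 8 * 3)]  # stand-in for a tiny image
alpha_bar = make_alpha_bar()
x_early, _ = add_noise(x0, 10, alpha_bar, rng)   # still close to the original
x_late, _ = add_noise(x0, 999, alpha_bar, rng)   # almost pure noise
```

Training then amounts to showing the model `x_t` and asking it to predict the noise that was added, so that it can later run the process in reverse.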

Latent Space

An RGB image with a resolution of 512x512 is a set of 512 * 512 * 3 numbers. If we learn directly from the pixels, the AI has to process data with 786,432 dimensions, which demands a great deal of computing power. Therefore, we need to compress the information, and the compressed space is called "latent space."
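To get a feel for the saving, here is the arithmetic as a small sketch. The latent shape is an assumption on my part: it uses the figures commonly cited for Stable Diffusion v1 (each side downsampled by 8, with 4 latent channels), which the article itself does not state.

```python
# Size of the image in pixel space.
pixel_values = 512 * 512 * 3

# Assumed latent shape: 8x downsampling per side, 4 channels.
latent_side = 512 // 8
latent_values = latent_side * latent_side * 4

compression_ratio = pixel_values / latent_values
print(pixel_values, latent_values, compression_ratio)
```

Under these assumptions the model works on 16,384 numbers instead of 786,432, a 48-fold reduction.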

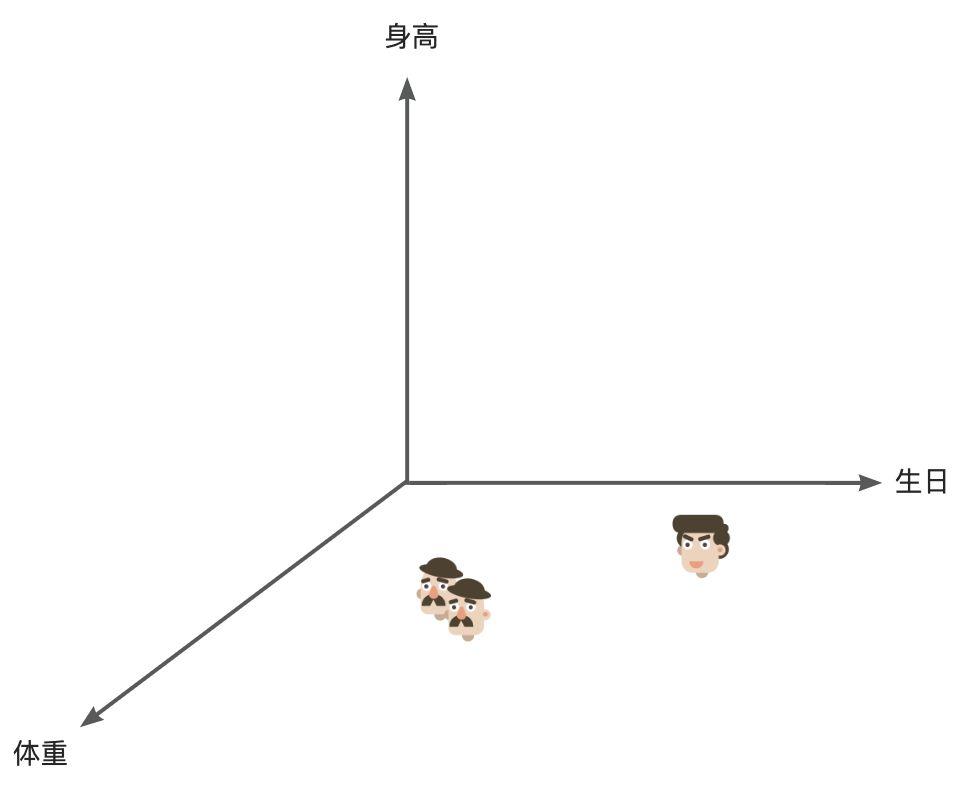

Suppose we have records for 10,000 people and want to find two who might be brothers. Comparing the records row by row means working through all 10,000 entries. But if we have a three-dimensional coordinate system representing a latent space of people, with the three axes being height, weight, and birthday, we can simply look for two adjacent points in this space. Two adjacent points are likely to represent similar individuals, which makes multi-dimensional information easier for AI to process.
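The analogy can be made concrete with a toy example. The people and their values below are invented purely for illustration, and a brute-force comparison of every pair stands in for the smarter nearest-neighbour search a real system would use:

```python
import math
from itertools import combinations

# Hypothetical records: (height in cm, weight in kg, birthday as day of year).
people = {
    "person_a": (180.0, 75.0, 100.0),
    "person_b": (179.0, 74.0, 103.0),
    "person_c": (165.0, 55.0, 300.0),
    "person_d": (172.0, 90.0, 20.0),
}

def closest_pair(points):
    """Return the two names whose coordinates are nearest to each other."""
    return min(
        combinations(points, 2),
        key=lambda pair: math.dist(points[pair[0]], points[pair[1]]),
    )

print(closest_pair(people))
```

In a learned latent space the axes are not hand-picked like this and are usually scaled so that no single feature dominates the distance, but the idea of "similar things sit close together" is the same.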

Human cognition works similarly; when encountering a new category of things, we subconsciously classify features and label them in multiple dimensions. For example, we can easily distinguish between a chair and a table because they are obviously different in terms of volume.

AI can also do the same thing, compressing a vast dataset into many feature dimensions, transforming it into a much smaller "latent space." Thus, finding an image is like searching for a corresponding coordinate point in such a space and then converting that coordinate point into an image through a series of processes.

CLIP

When using various online AIGC services, we often utilize the text-to-image function. To establish a connection between text and images, AI needs to learn the matching of images and text from a massive amount of "text-image" data. This is what CLIP (Contrastive Language-Image Pre-Training) does.
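The matching idea can be sketched with hand-made vectors. This is only an illustration: the embeddings are invented rather than produced by a real encoder, the temperature value is an assumption, and the loss shown is just the image-to-text half of the symmetric objective used in contrastive training.

```python
import math

def normalize(vector):
    norm = math.sqrt(sum(x * x for x in vector))
    return [x / norm for x in vector]

def similarity_matrix(image_embs, text_embs):
    """Cosine similarity between every image and every caption."""
    images = [normalize(v) for v in image_embs]
    texts = [normalize(v) for v in text_embs]
    return [[sum(a * b for a, b in zip(i, t)) for t in texts] for i in images]

def contrastive_loss(sim, temperature=0.07):
    """Average cross-entropy of picking caption i for image i (row-wise)."""
    total = 0.0
    for i, row in enumerate(sim):
        logits = [s / temperature for s in row]
        top = max(logits)
        log_sum = top + math.log(sum(math.exp(l - top) for l in logits))
        total += log_sum - logits[i]
    return total / len(sim)

# Toy embeddings: image i and caption i are meant to describe the same thing.
image_embs = [[0.9, 0.1, 0.0], [0.1, 0.8, 0.2], [0.0, 0.2, 0.9]]
text_embs = [[1.0, 0.0, 0.1], [0.0, 1.0, 0.1], [0.1, 0.1, 1.0]]

sim = similarity_matrix(image_embs, text_embs)
matched_loss = contrastive_loss(sim)
mismatched_loss = contrastive_loss(similarity_matrix(image_embs, text_embs[::-1]))
```

Training pushes the encoders so that matching pairs score high on the diagonal of `sim` and everything else scores low, which is why the correctly paired data gives a lower loss than the shuffled captions.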

The overall process can be summarized as:

- The image encoder compresses the image from pixel space into a lower-dimensional latent space, capturing the essential information of the image;

- Noise is added to the image in latent space (the diffusion process);

- The input description is converted by the CLIP text encoder into conditions for the denoising process (conditioning);

- The image is denoised under these conditions to obtain the latent representation of the generated image. The denoising steps can flexibly take text, images, and other inputs as conditions (text-to-image is txt2img, image-to-image is img2img);

- The image decoder converts the image from latent space back to pixel space to produce the final image.
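The data flow of the text-to-image case can be sketched as follows. Every component here is a deliberately trivial stand-in that I made up to show the order of operations (condition, start from noise, denoise repeatedly in latent space, decode); none of it resembles the real networks.

```python
import random

LATENT_SIZE = 16  # toy latent; real latents are far larger

def encode_text(prompt):
    """Toy stand-in for the text encoder: one deterministic vector per prompt."""
    rng = random.Random(prompt)
    return [rng.uniform(-1.0, 1.0) for _ in range(LATENT_SIZE)]

def denoise_step(latent, cond, strength=0.2):
    """Toy stand-in for the denoising network: nudge the latent toward the condition."""
    return [z + strength * (c - z) for z, c in zip(latent, cond)]

def decode(latent, scale=4):
    """Toy stand-in for the image decoder: repeat each latent value `scale` times."""
    return [z for z in latent for _ in range(scale)]

def txt2img(prompt, steps=30, seed=0):
    cond = encode_text(prompt)                                   # conditioning
    rng = random.Random(seed)
    latent = [rng.gauss(0.0, 1.0) for _ in range(LATENT_SIZE)]   # start from pure noise
    for _ in range(steps):                                       # iterative denoising
        latent = denoise_step(latent, cond)
    return decode(latent)                                        # back to "pixel" space

image = txt2img("a girl sitting on the edge of a rooftop")
```

An img2img variant would differ only in the starting point: instead of pure noise, it would begin from the encoded input image with some noise added.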

Workflow Example#

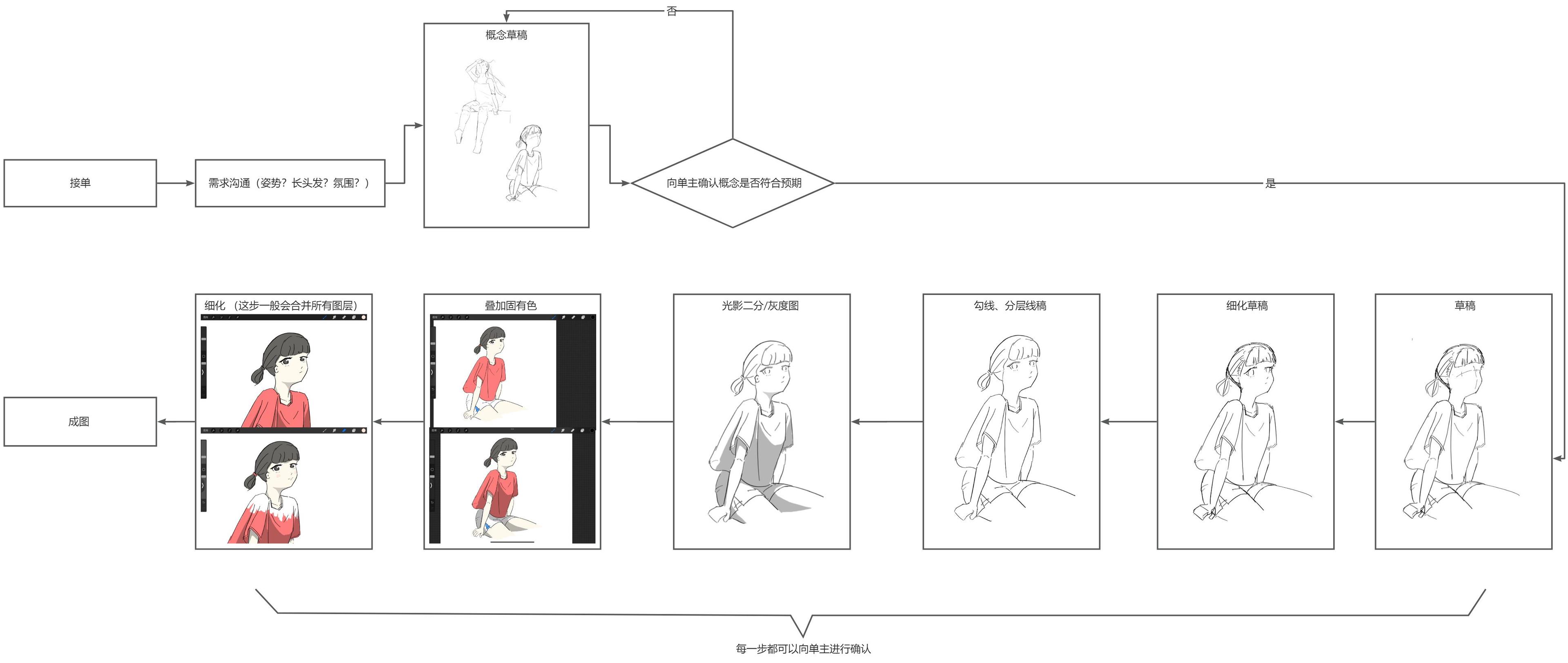

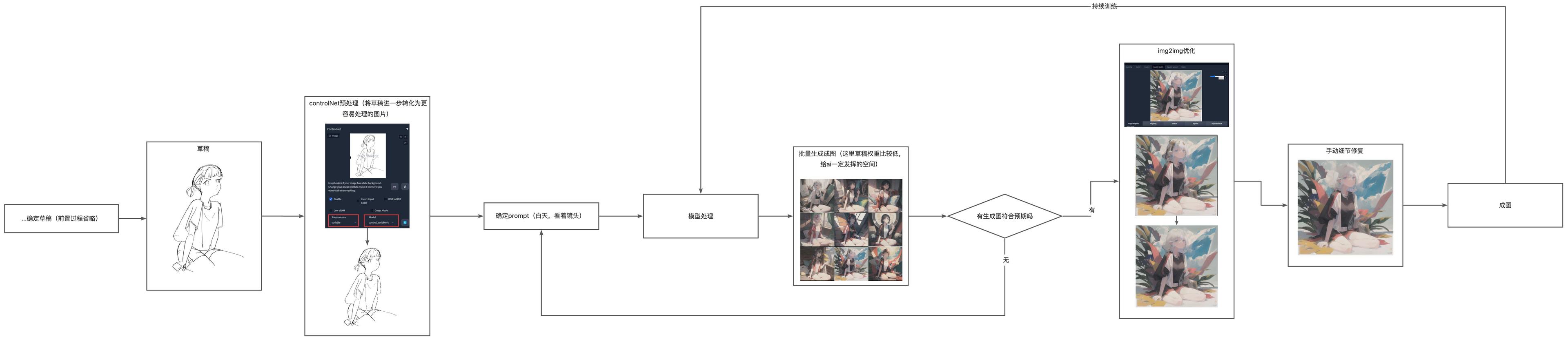

Now let's take a request as an example: a client wants an illustration of "a girl sitting on the edge of a rooftop looking up at the camera." (If the image is not large enough, you can right-click to open it in a new tab.)

Traditional Workflow#

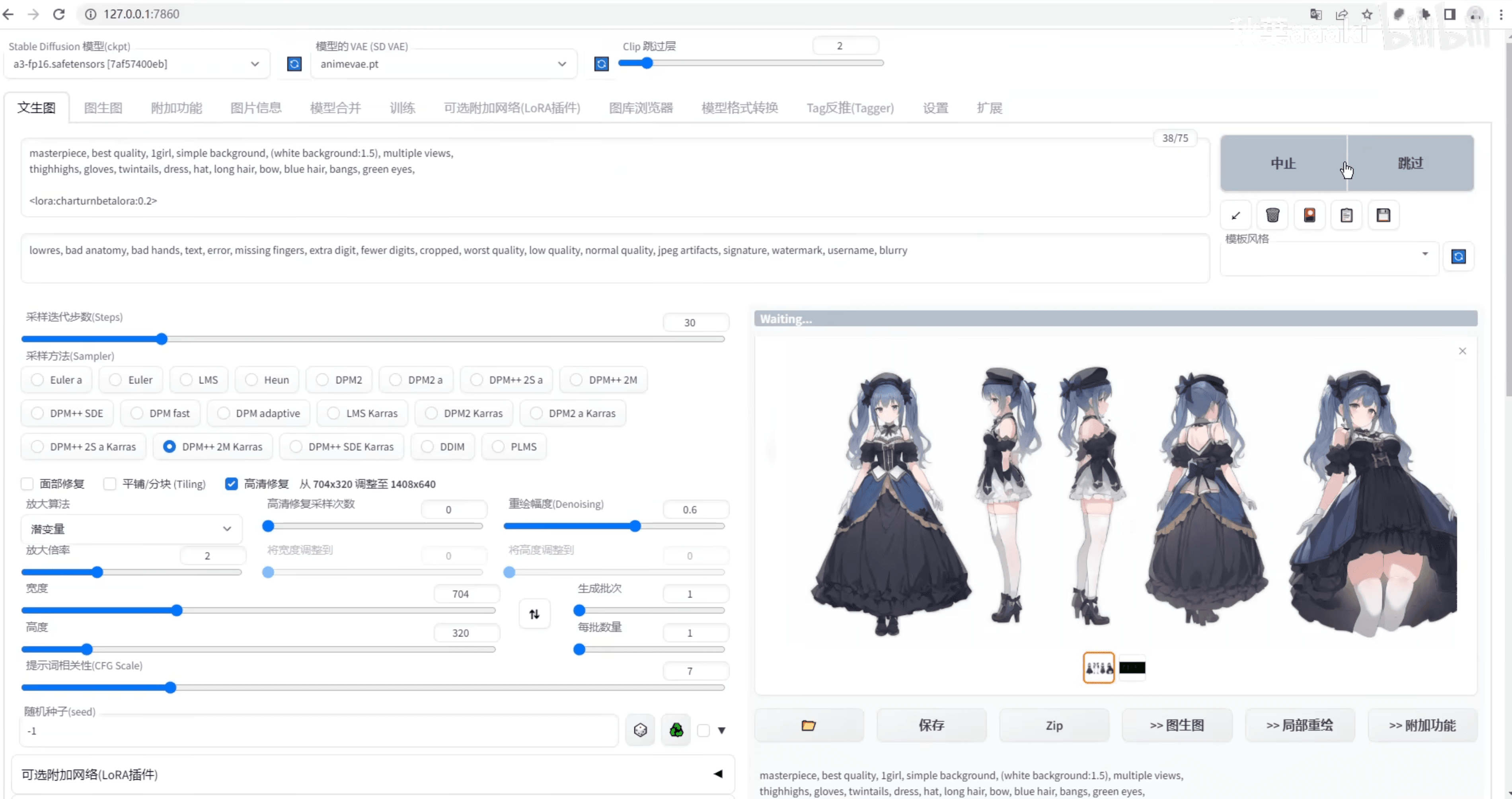

Below are several ideas I have for optimizing the workflow with AI, using a locally deployed AI painting tool, stable-diffusion-webui.

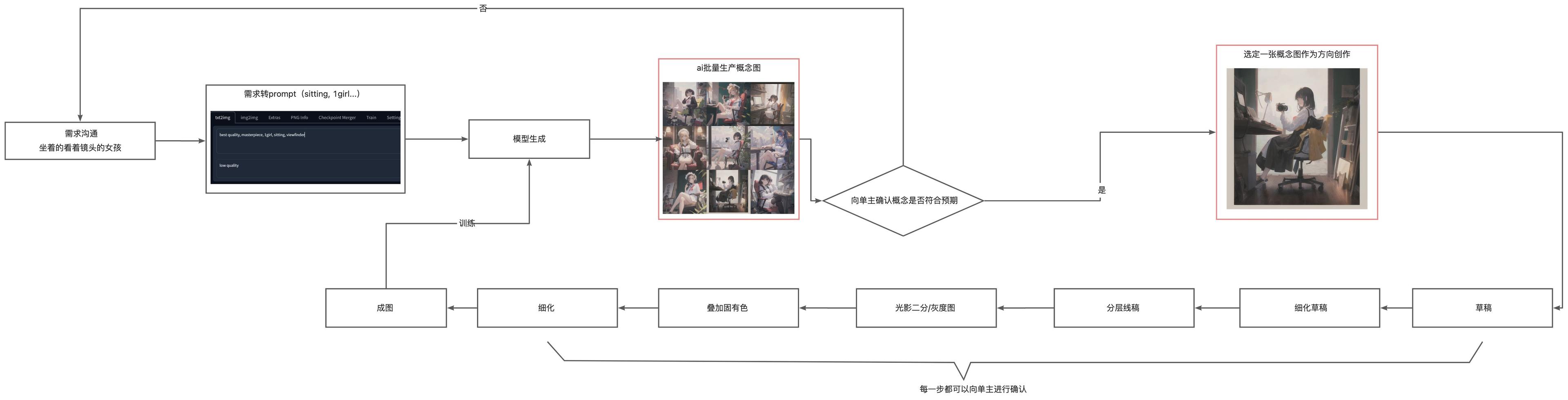

Batch Concept Art Workflow#

During the requirements discussion and concept draft phase, a large number of concept images can be generated quickly, allowing the client to pick the one closest to what they want. This suits scenarios where the client's requirements are unclear. Once the direction is settled, we create the artwork ourselves using the concept image as a reference (rather than directly modifying the AI's image), which significantly reduces the chance of rework.
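As a rough sketch of how such a batch could be requested from a locally running stable-diffusion-webui, the snippet below builds the JSON body for its `txt2img` HTTP API. It assumes the web UI was started with the `--api` flag and listens on the default local address; the prompt text, negative prompt, step count, and batch sizes are illustrative values, and `build_txt2img_payload` and `post_json` are hypothetical helper names.

```python
import json
import urllib.request

API_URL = "http://127.0.0.1:7860/sdapi/v1/txt2img"  # assumed default local address

def build_txt2img_payload(prompt, images_per_batch=4, batches=3, steps=20):
    """Request images_per_batch * batches candidate images for one prompt."""
    return {
        "prompt": prompt,
        "negative_prompt": "lowres, bad anatomy",  # illustrative only
        "steps": steps,
        "width": 512,
        "height": 512,
        "batch_size": images_per_batch,  # images generated in parallel
        "n_iter": batches,               # how many batches to run in sequence
        "seed": -1,                      # -1 asks for a random seed each time
    }

def post_json(url, payload):
    """Send the payload; the response JSON carries base64-encoded images."""
    request = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as response:
        return json.load(response)

payload = build_txt2img_payload(
    "a girl sitting on the edge of a rooftop, looking up at the camera"
)
# images = post_json(API_URL, payload)["images"]  # needs the web UI running
```

With these values a single request yields twelve candidates for the client to choose from.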

This method still requires the creator to do the drawing personally, but because every final image is their own work, they can use those images along the way to train a model in their own style, which can then be used in future workflows.

Post-AI Workflow#

Here the AI-generated image is the final output of the workflow: the creator only needs to provide a draft and a rough descriptive prompt, and the AI generates the image. The draft controls the overall composition, while colors and atmosphere are left to the AI. This is the method I use most often, as it strikes a balance between creation and automation.
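A minimal sketch of what the draft-plus-prompt input looks like when sent to stable-diffusion-webui's `img2img` API (endpoint `/sdapi/v1/img2img`, available when the web UI runs with `--api`). The helper name, the placeholder draft bytes, and the chosen strength and step values are assumptions for illustration:

```python
import base64

def build_img2img_payload(draft_png_bytes, prompt, denoising_strength=0.6):
    """Pair a hand-drawn draft with a rough prompt for an img2img request."""
    if not 0.0 <= denoising_strength <= 1.0:
        raise ValueError("denoising_strength must be between 0 and 1")
    return {
        "init_images": [base64.b64encode(draft_png_bytes).decode("ascii")],
        "prompt": prompt,
        # Lower values stay closer to the draft; higher values give the AI more freedom.
        "denoising_strength": denoising_strength,
        "steps": 30,
    }

draft = b"\x89PNG placeholder bytes"  # in practice: the bytes of the draft image file
payload = build_img2img_payload(draft, "girl on a rooftop, sunset, soft lighting")
```

The `denoising_strength` value is the main dial for the balance described above: it decides how much of the draft's structure survives and how much is repainted.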

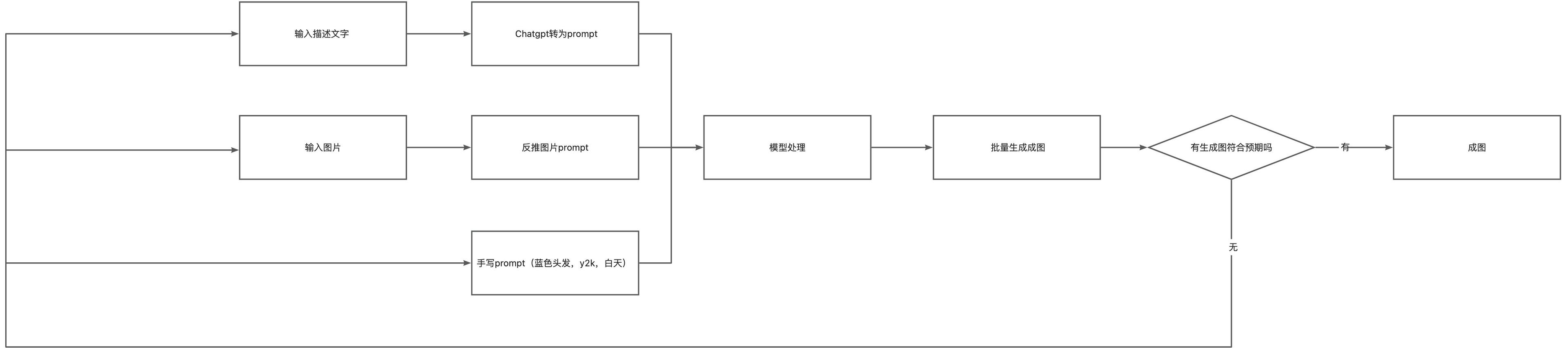

No Human Drawing Workflow#

Another method is to generate images directly from inputs (which can be descriptive text, images, or raw prompts), pushing automation even further. However, I personally do not favor this method, as it only suits very simple, low-requirement tasks, or cases where your model has already been trained very thoroughly. The auto-delivery AI drawing services commonly sold on second-hand marketplaces basically work this way.

The above lists several workflows I have tried before, but these workflows can also be tailored and combined according to the scenario. After continuously training your model with high-quality output images, you can obtain a model with a personal style.

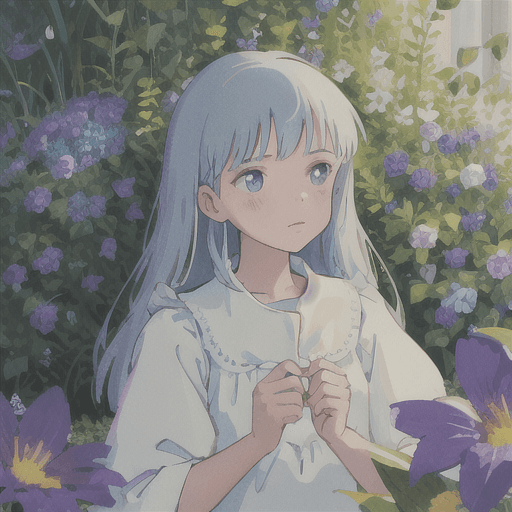

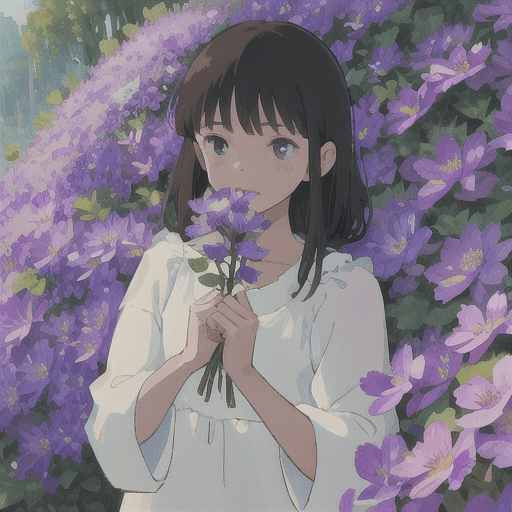

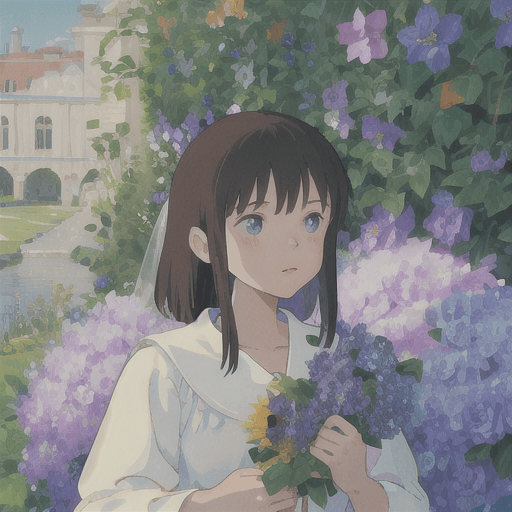

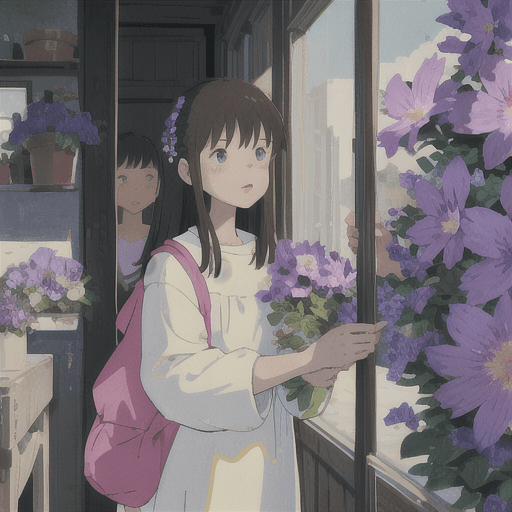

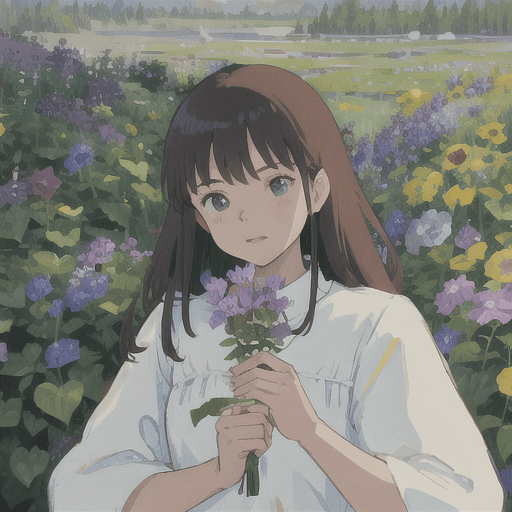

This is a set of LoRA models trained in the style of Studio Ghibli, mixed with other base models to produce portraits, along with the prompt "flower":

In addition to these, Stable Diffusion can also work with other plugins to do many things, such as generating three-view images with one click, which can serve as a character design reference in projects lacking professional art resources. (Imagine if what you later spent 648 yuan on in a game was this):

※ Currently, the painting community in China is very sensitive to AI. When the interest community Lofter supported AI-generated avatars, a large number of artists deleted their accounts.

Current Defects#

Difficulty in Secondary Changes#

In traditional digital painting, we layer images. For example, the wings and decorations of a character are drawn on a separate layer, allowing for easy adjustments to the position of the wings during the mid-stage. If we want to create skeletal animations, it is also easier to disassemble parts. However, if the input image is a complete image and the AI outputs a complete image, this mode does not match the traditional layering logic. The manual detail repair workload is significant. If the client requests secondary changes to the positions/shapes of various objects in the illustration, the lack of layering means that each change may affect other objects, and the brush strokes modified manually may not blend well with the AI-generated image, leading to considerable overall costs.

One alternative is to give the AI the line art of the hands and the body separately so that it produces layered components, repeat this for the other components, and then assemble the AI-processed parts myself. Processing separately and merging at the end sounds like a more ideal workflow, but handing each component to the AI on its own may leave the generated components lacking uniformity, so that they feel disjointed. For example, the clothing generated for the body may have one style, while the sleeves on the generated arms may not match it. Because the parts are processed separately rather than together, the AI cannot effectively relate them.

Lack of Logical Consistency in Products#

In industrial art, it is not enough for the detail of a single image to meet the standard; the art resources as a whole must also be consistent. For example, if character A wears a fixed logo, character B in the same series should wear the same one. Logically these are the same item, but current models may produce logos for the two characters that differ to some extent. Even if the differences are minor, this is not acceptable logically. If the logo's shape varies slightly or gains extra elements, people will perceive two different objects, and it fails to serve as a "unified object."

If the illustration does not require high detail or is used for brainstorming concept art, AI is very suitable. However, for artistic resources in the rigorous industrial art of gaming, current AI still lacks controllability and secondary creation capabilities.

But given the current pace of evolution, I believe that solutions to the aforementioned problems will emerge in the near future.

The Spinning Jenny#

In many discussions among AIGC supporters, the spinning jenny is often brought up as a comparison, suggesting that AIGC is the next spinning jenny, capable of changing the production relations of current artistic creation, with opponents likened to the "reactionary textile workers" who smashed machines at that time. This perspective seems a bit too social Darwinist (i.e., survival of the fittest). Looking at what is actually produced, the textiles made by the machine and the artworks made by artists belong to two different dimensions. The former is a basic necessity of life where the output is what matters, while the latter is a spiritual consumer product where the creative process matters. When we see Van Gogh's self-portrait, we can recall his tragic life; every texture of paint and every brushstroke was made by his own hand. All these elements are part of the appreciation experience, and they are fundamentally absent from artworks generated by AIGC in seconds.

In the past, humans were the main subjects of artistic creation, and the subject itself was also part of the creation. The "story" of the creator and the creative process is part of the product. Injecting stories into cheap products is one of the means of upgrading consumption. This attribute will not change with the emergence of AIGC. What AIGC does is raise the minimum level of quality and reduce the socially necessary labor time. The rich will still have endless money, and the consumption that needs upgrading will still upgrade. It is only in scenarios where the story is not highly valued, such as cheap decorative paintings, that the advantages of AIGC will become very apparent.

The Future#

AI has already had many applications in the creative field, such as Photoshop's cutout and magic selection. However, the reason many people feel impacted now is that they have discovered that AI can directly produce final products, potentially replacing their positions. It is no longer just a tool but an entity that may stand on equal footing with them in terms of work attributes. In some professions I am aware of, such as line assistants in comics and in-betweeners in animation, I personally feel that they will likely be replaced in the future. A screenshot from a comment section, the authenticity of which I cannot guarantee:

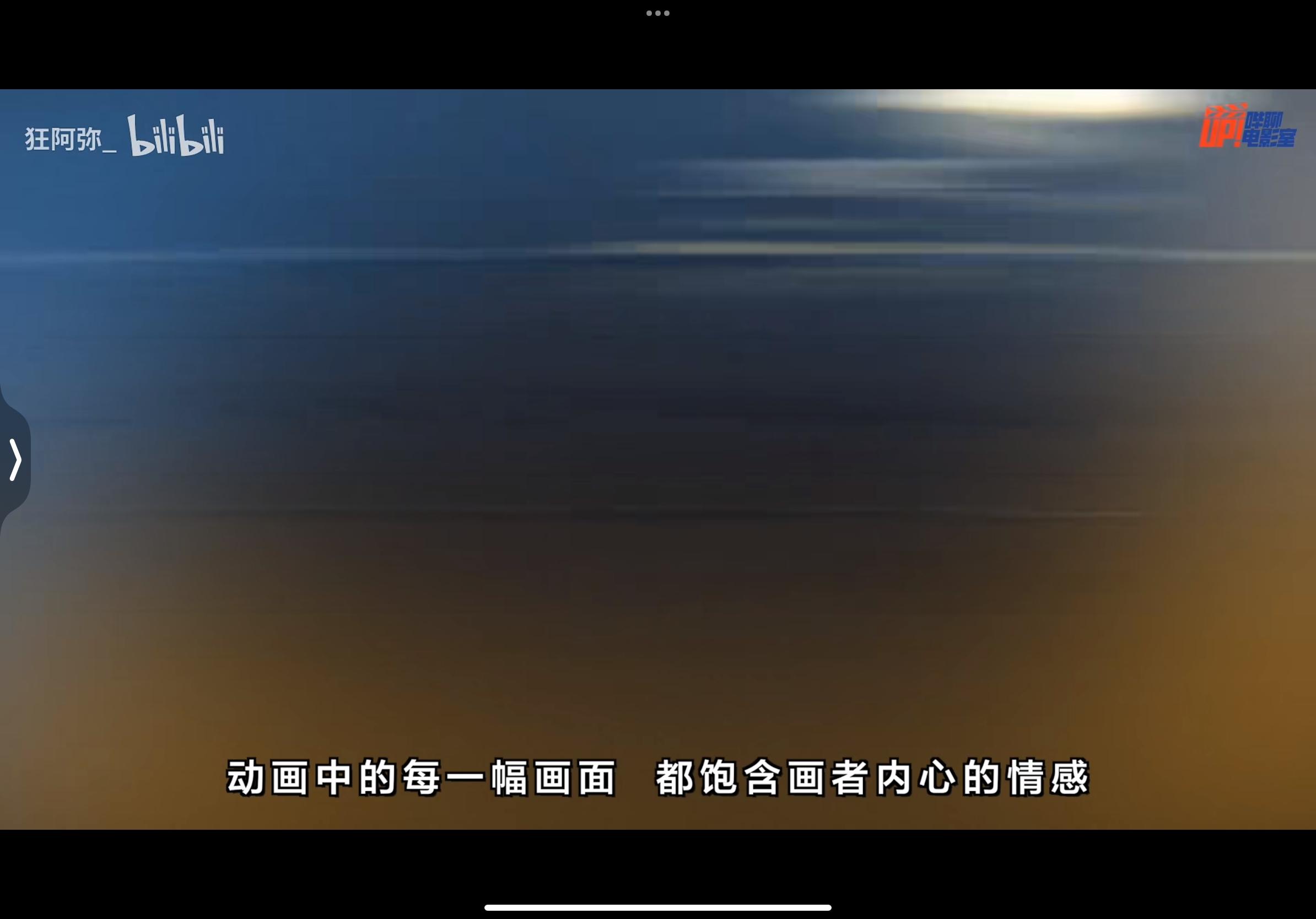

However, I still believe that 2D art, such as animation, should not and cannot be completely replaced by AI. To quote a segment from a Bilibili uploader's interview with Makoto Shinkai, on the subject of background images in animation:

In animation, even a single leaf in the background of a frame may have drops of dew added by the creator because it happened to be raining during the creation process.

Every concept you learn, every emotion you experience, and everything you see, hear, smell, taste, or touch includes data about your bodily state. You may not experience your mental life in this way, but that is what happens "behind the scenes."

Suppose AI animation becomes widespread in the future. If you are watching an animation that has been perfect so far and a single frame suddenly contains a logically inconsistent object, then once you notice it, even for just that one frame, it will instantly pull you out of the beautiful dream crafted by AI, much like the uncanny valley effect.

Compared to 2D art, 3D creation has a stronger industrial attribute, clearer assembly line layering, and is more compatible with AI. For example, the recently released tool Wonder Studio can replace characters with one click and automatically light them. Its most practical feature is generating intermediate layers for skeletal animation, supporting secondary modifications. Compared to expensive motion capture, this method is much more cost-effective.

But compared to all of the above, AI directly generating photographic works terrifies me even more. A person can create a space that does not belong to any location in this world in just a few seconds, breaking the authenticity and record-keeping nature of photography.

Perhaps by 2030, once AI photography has spread, your first thought on seeing a landscape photograph may no longer be to marvel at the composition, lighting, mountains, and water. Instead you may wonder whether it was generated by AI, and whether the person who took it was really there: breathing the air, feeling the sunlight, and raising the camera in a rush of endorphins as the reflected light hit their retina, capturing one moment at one specific place in the world. Of course, they could also have ChatGPT help them fabricate a story about going through all of this to produce the work.

What Can We Do#

As a programmer, I used to fall into the misconception that I had to start from the very bottom of a field to count as having "gotten started." However, current AI already has many convenient upper-level applications. If your foundation is weak, starting directly from these commonly used upper-level applications and contributing with the abilities you already have is also a viable option. For example, if my foundation in AI is very weak, I can fine-tune models on top of existing services (such as OpenAI's fine-tuning) for more specialized fields; if I only know CRUD, I can call their open API to build service wrappers; if I only know frontend, I can develop a better UI; if I only know how to draw, I can try to optimize my workflow and improve efficiency with the current capabilities. These are all good practices.

Conclusion#

Currently, AI seems to outperform humans in certain specialized fields, and its multimodal capabilities have also made significant progress. However, the human brain and body still contain many black boxes. The intelligence demonstrated by ChatGPT, which is powered by GPT-3.5, differs significantly from how the human brain actually works, and the human brain remains far more efficient than machines (for example, training a ChatGPT model based on GPT-3.5 is estimated to cost between $4.6 million and $5 million). For now, AI in specialized fields appears more cost-effective than the pursuit of strong artificial intelligence. In the foreseeable future, we need not worry about being completely replaced.

But just as we need to learn how to interact with people, we will likely need to learn how to communicate and collaborate with AI in the future. We will need to learn how to describe images to Stable Diffusion with more precise prompts, how to ask ChatGPT questions with clearer statements and more reasonable guidance to construct context, in order to obtain the desired results more quickly.

Humans' own multimodal and generalization capabilities are still very powerful, and unquantifiable traits such as empathy and curiosity continue to silently drive society forward. I somewhat believe in the idea from Interstellar that only love and gravity can transcend time and space. Rationally, looking at the sky seems meaningless, but perhaps it was the curiosity of the first ape to gaze at the stars that has brought us to where we are now.